2020.06.11

Curious Exploration in Reinforcement Learning via Skill-based Rewards

SOKENDAI Publication Grant for Research Papers program year: 2019

Informatics Nicolas Bougie

情報学コース

Skill-based curiosity for intrinsically motivated reinforcement learning

journal: Machine Learning Journal publish year: 2019

DOI: 10.1007/s10994-019-05845-8

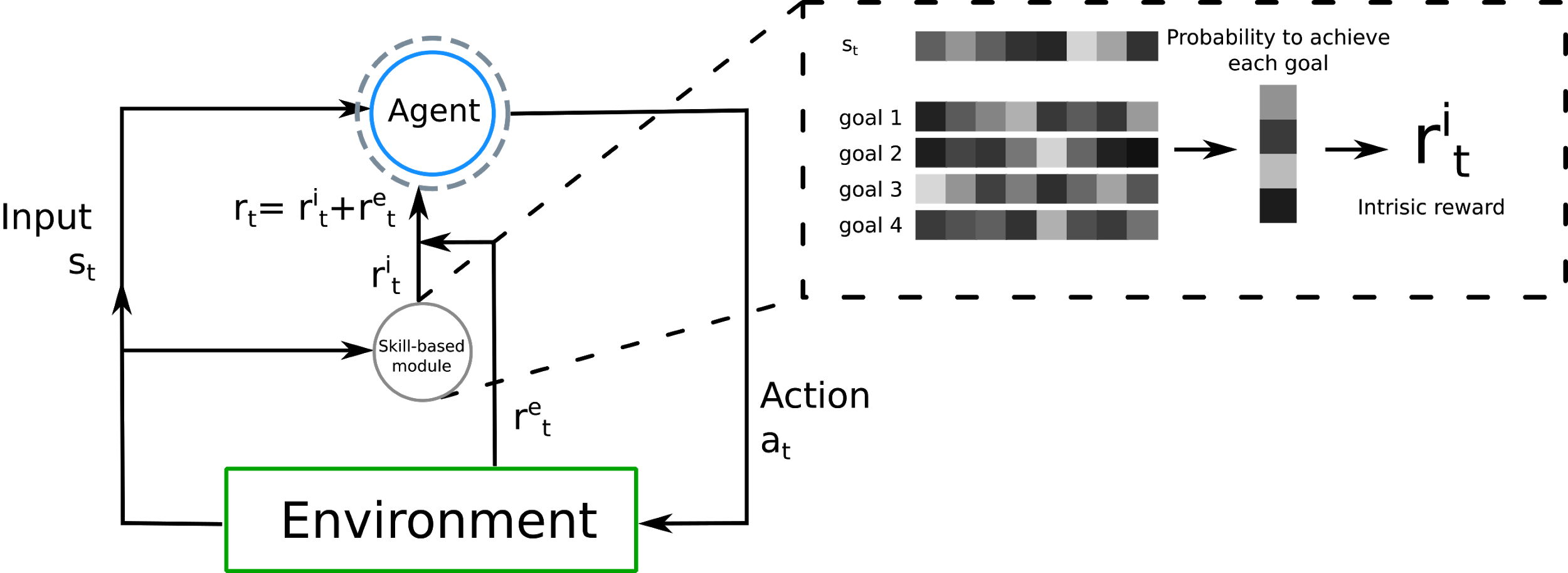

In a state st, the agent interacts with the environment. It selects an action at and receives a task reward ret and an intrinsic reward rit. The intrinsic reward is generated by the skill-based module (dashed line box). Given the current state st and a set of goals, it generates an intrinsic reward based on the probability to achieve these goals. Each goal represents a particular state that the agent aims to reach.

Summary

Reinforcement learning (RL) provides a formalism for learning policies by interacting with an environment. At each time-step, the agent (i.e. the entity interacting with the environment) receives an observation and chooses an action. It gets a feedback from the environment called a reward. Traditionally, RL aims to learn an optimal policy that maximizes cumulative rewards; it may be the distance to the goal or specifically designed for the task. RL has achieved many accomplishments in a wide variety of application domains such as game playing (e.g. Go, Starcraft), or even recommendation systems.

However, one key constraint for them is the need for well-designed and dense rewards. Hand-crafting such reward functions is a challenging engineering problem. As a result, many real-world scenarios such as robot control involve sparse or poorly defined rewards - zero for most of the time. Therefore, applying reinforcement learning in such tasks remains highly challenging.

Inspired by curious behaviors in animals, a solution is to let the agent develop its own intrinsic rewards. By combining such exploration bonus with task rewards, it can enable RL to achieve human-performance in a variety of tasks. We propose a novel formulation of curiosity based on the ability of the agent to achieve multiple goals during training. The goals are automatically generated based on the states visited by our agent. By estimating the likelihood to achieve the goals, we can reward the hard-to-learn regions of the environment. As a result, our approach gives more importance to states depending on their complexity.

We evaluate our method in different environments including Atari arcade learning environment or Mujoco. The former is a set of games with complex dynamics and hard to explore. The latter consists of different robotic tasks such as "learning to grab an object". We found that curiosity is crucial to learn efficient exploration strategies in sparse tasks. Our method achieved state of the art in several Atari games. We also show that our agents can explore in the absence of task reward. In the future, we hope to test this method on real robots.

Title of the journal: Machine Learning Journal

Publication date: 10 October 2019

Title of the paper: Skill-based curiosity for intrinsically motivated reinforcement learning

Names of the authors: Nicolas Bougie and Ryutaro Ichise

URL1: https://doi.org/10.1007/s10994-019-05845-8

URL2: https://link.springer.com/article/10.1007/s10994-019-05845-8

Nicolas Bougie, Department of Informatics